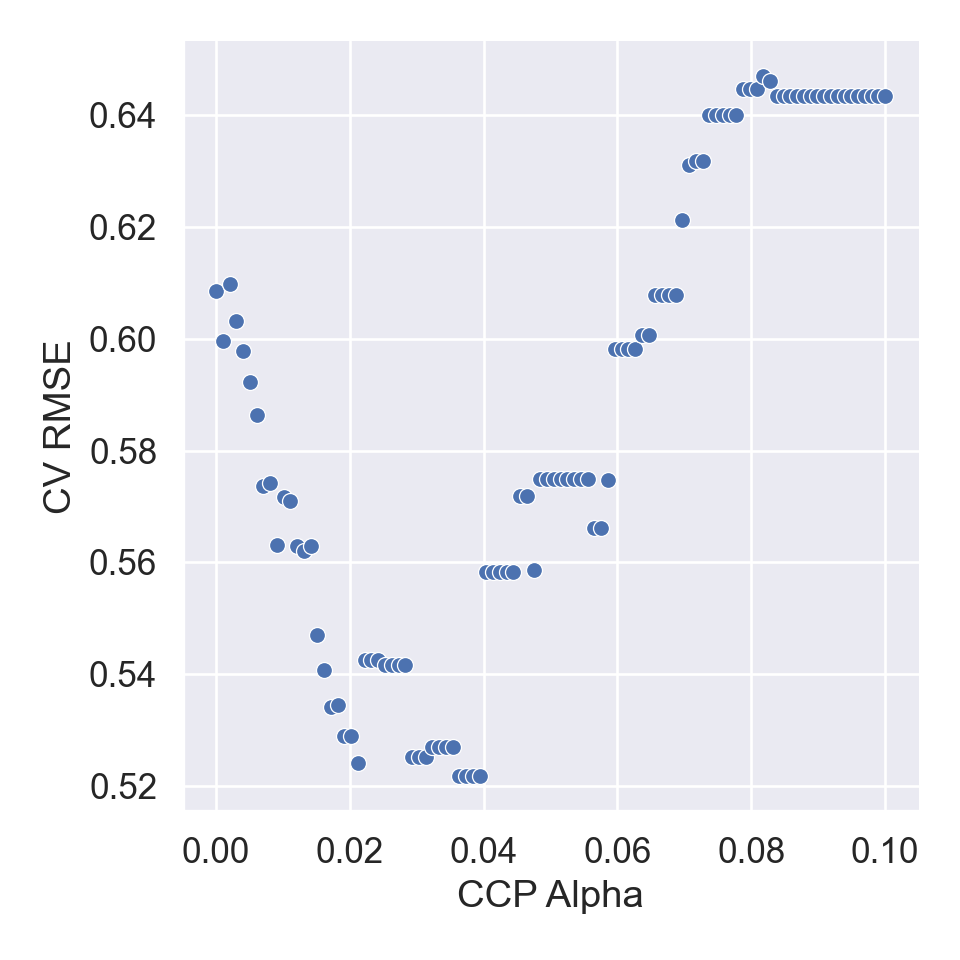

{'model__ccp_alpha': array([0. , 0.0010101 , 0.0020202 , 0.0030303 , 0.0040404 ,
0.00505051, 0.00606061, 0.00707071, 0.00808081, 0.00909091,
0.01010101, 0.01111111, 0.01212121, 0.01313131, 0.01414141,
0.01515152, 0.01616162, 0.01717172, 0.01818182, 0.01919192,
0.02020202, 0.02121212, 0.02222222, 0.02323232, 0.02424242,
0.02525253, 0.02626263, 0.02727273, 0.02828283, 0.02929293,
0.03030303, 0.03131313, 0.03232323, 0.03333333, 0.03434343,
0.03535354, 0.03636364, 0.03737374, 0.03838384, 0.03939394,
0.04040404, 0.04141414, 0.04242424, 0.04343434, 0.04444444,
0.04545455, 0.04646465, 0.04747475, 0.04848485, 0.04949495,
0.05050505, 0.05151515, 0.05252525, 0.05353535, 0.05454545,
0.05555556, 0.05656566, 0.05757576, 0.05858586, 0.05959596,
0.06060606, 0.06161616, 0.06262626, 0.06363636, 0.06464646,
0.06565657, 0.06666667, 0.06767677, 0.06868687, 0.06969697,
0.07070707, 0.07171717, 0.07272727, 0.07373737, 0.07474747,
0.07575758, 0.07676768, 0.07777778, 0.07878788, 0.07979798,
0.08080808, 0.08181818, 0.08282828, 0.08383838, 0.08484848,
0.08585859, 0.08686869, 0.08787879, 0.08888889, 0.08989899,
0.09090909, 0.09191919, 0.09292929, 0.09393939, 0.09494949,
0.0959596 , 0.0969697 , 0.0979798 , 0.0989899 , 0.1 ])}
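
The printed grid holds 100 evenly spaced values from 0 to 0.1 (step 0.1/99 ≈ 0.0010101), which is what `np.linspace(0, 0.1, 100)` produces. The sketch below shows one way such a grid could be built and attached to a pipeline. The pipeline itself, the choice of `DecisionTreeClassifier`, and the `cv=5` setting are assumptions made for illustration; the output above only tells us that a pipeline step named `model` accepts a `ccp_alpha` parameter.

```python
import numpy as np
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import Pipeline
from sklearn.tree import DecisionTreeClassifier

# 100 evenly spaced cost-complexity pruning strengths from 0 to 0.1,
# matching the values in the printed grid.
param_grid = {"model__ccp_alpha": np.linspace(0.0, 0.1, 100)}

# Assumed pipeline: the step name "model" is what the "model__" prefix
# refers to. The estimator is a guess; any tree-based estimator with a
# ccp_alpha parameter would be addressed the same way.
pipeline = Pipeline([("model", DecisionTreeClassifier(random_state=0))])

# The "<step>__<parameter>" key must be one the pipeline exposes.
is_valid_key = "model__ccp_alpha" in pipeline.get_params()

# Assumed search setup (cv=5 is illustrative). Calling search.fit(X, y)
# on your own data would try each of the 100 alphas.
search = GridSearchCV(pipeline, param_grid, cv=5)

print(len(param_grid["model__ccp_alpha"]), is_valid_key)
```

A `ccp_alpha` of 0 means no pruning, and larger values prune the tree more aggressively, so the grid runs from an unpruned tree to progressively simpler ones.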